New Delhi: In its latest push in the rapidly growing AI landscape, Amazon is set to invest $110 million in its new program, Build on Trainium. The program aims to advance research in generative AI and will support researchers, academic institutions, and students. To that end, Amazon will provide free computing power to those who use Trainium, the specialized deep-learning AI chip from Amazon Web Services (AWS). At the core of the Build on Trainium program is the provision of up to $11 million in Trainium credits for participating academic institutions.

The credits can be used in AWS’s cloud data centres, so that universities and research centres can run complex AI models on AWS’s cloud infrastructure. In addition, individual research projects outside of AWS’s strategic academic partnerships will be able to apply for grants of up to $500,000. As part of Build on Trainium, AWS and leading AI research institutions are also establishing dedicated funding for new research and student education. Amazon will also conduct multiple rounds of Amazon Research Awards calls for proposals, with selected proposals receiving AWS Trainium credits and access to the large Trainium UltraClusters for their research, the company noted in an official statement.

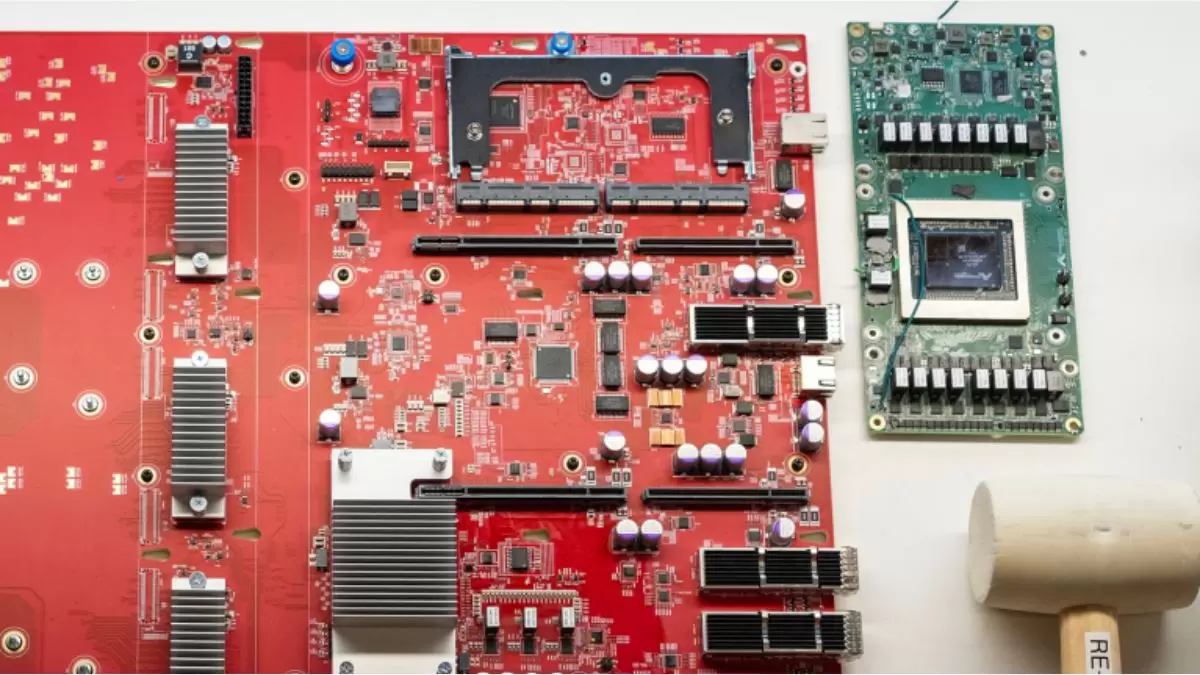

Furthermore, AWS intends to establish a massive research cluster comprising up to 40,000 Trainium chips. These chips will be made available to researchers and students via self-managed reservations, giving them access to the computational power needed to accelerate AI research. As for Trainium itself, the chip is designed specifically for machine learning tasks, particularly deep learning training and inference. It can handle the large-scale computational demands of generative AI and machine learning models, providing more efficient and cost-effective solutions than general-purpose processors.

“AWS’s Build on Trainium initiative enables our faculty and students to have large-scale access to modern accelerators, like AWS Trainium, with open programming models. It allows us to greatly expand our research on tensor program compilation, ML parallelization, and language model serving and tuning,” Todd C. Mowry, a professor of computer science at CMU, commented on the matter. The Build on Trainium program will also foster a collaborative environment for AI researchers. AWS is already working closely with the Neuron Data Science community, a virtual organization led by Annapurna Labs, to connect researchers to AWS’s broader technical resources and educational programs.